The Architecture Behind NGINX

How One Server Handles Millions of Requests

When Netflix streams a movie to your living room, when Amazon processes your order, or when GitHub serves code to millions of developers, there's a good chance NGINX is quietly working behind the scenes, handling the digital traffic with remarkable efficiency.

NGINX doesn't handle this massive load by throwing more hardware at the problem or spinning up thousands of threads like traditional servers. Instead, it uses an architectural approach so elegant and efficient that a single server can handle hundreds of thousands of concurrent connections without breaking a sweat.

Understanding how NGINX works reveals fundamental principles about building scalable systems, principles that apply whether you're designing web servers, real-time applications, or any system that needs to handle high concurrency. It's a masterclass in doing more with less.

The Traditional Approach: Why More Threads Don't Always Help

Traditional web servers such as Apache HTTP Server, in its classic prefork and worker configurations, dedicate one process or thread to each connection. This is often called the "thread-per-request" model. The concept seems straightforward: when a user connects to your website, the server assigns a dedicated thread to handle their request from start to finish.

Imagine three users visiting your website simultaneously. The server dutifully creates three threads, one for each user. If you have a dual-core processor, two threads can run immediately while the third waits its turn. This sounds manageable until you scale up.
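The three-user scenario can be sketched in a few lines of Python. This is only an illustration of the thread-per-connection idea, not how Apache is implemented; the names `handle` and `serve_threaded` and the uppercase "response" are made up for the example.

```python
import socket
import threading


def handle(conn):
    """Serve one connection from start to finish on its own thread."""
    with conn:
        data = conn.recv(4096)  # blocks this thread until the client sends data
        if data:
            conn.sendall(data.upper())  # toy "response": echo in uppercase


def serve_threaded(listener, max_conns):
    """Accept max_conns connections, starting one dedicated thread for each."""
    threads = []
    for _ in range(max_conns):
        conn, _addr = listener.accept()
        thread = threading.Thread(target=handle, args=(conn,))
        thread.start()
        threads.append(thread)
    for thread in threads:
        thread.join()
    return len(threads)
```

Every accepted connection costs one thread for its whole lifetime, even while that thread is just waiting in `recv`. That per-connection cost is what becomes a problem as the number of users grows.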

Now imagine 10,000 users. Suddenly you have 10,000 threads competing for CPU time, each consuming memory for its stack space, and the operating system spending significant time just switching between threads, a process called context switching. What started as a simple model becomes a performance nightmare.

Each thread typically reserves 2-8 MB of memory just for its stack. With 10,000 concurrent connections, that is 20-80 GB set aside before your application does anything useful. On most systems this is a virtual memory reservation, and only the pages a thread actually touches consume physical RAM, but the per-thread cost still adds up quickly. Add the CPU overhead of context switching between thousands of threads, and your server spends more time managing threads than serving requests.
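The arithmetic behind those figures is simple enough to check directly. The helper below is a hypothetical name, and it uses binary units (MiB and GiB), so the results come out slightly under the rounded 20 and 80 GB quoted above.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3


def stack_reservation_gib(threads, stack_mib):
    """Total stack space reserved by `threads` threads, in GiB."""
    return threads * stack_mib * MIB / GIB


low = stack_reservation_gib(10_000, 2)   # 2 MiB stacks
high = stack_reservation_gib(10_000, 8)  # 8 MiB stacks
print(f"{low:.1f} GiB to {high:.1f} GiB reserved for thread stacks")
```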

Apache has evolved sophisticated mechanisms to handle this, including worker pools, the event MPM, and process limits, but the fundamental challenge remains: the thread-per-request model doesn't scale linearly with load.

This is where NGINX took a radically different approach.

The NGINX Revolution

Event-Driven Architecture

Instead of creating a new thread for every request, NGINX flips the problem on its head. It runs a small number of worker processes, typically one per CPU core, and each worker can handle thousands of connections concurrently.

The architecture looks deceptively simple. At the top sits a master process that doesn't handle traffic directly but reads configuration, spawns worker processes, and handles graceful reloads. Below it are the worker processes, the real engines that serve requests. When caching is enabled, you will also see cache loader and cache manager processes, which populate and prune the on-disk cache of responses in the background.

But the magic happens inside each worker process, where NGINX implements what's called an event loop.

Inside the Event Loop

When requests arrive, new connections queue up on what's called a listen socket. A socket, in networking terms, is a communication endpoint. The listen socket only receives incoming connections, and each accepted connection gets its own socket that stays open for the entire conversation. The listen socket maintains a backlog of new connections waiting to be accepted, like a waiting room for new customers.

Once a worker accepts a connection, it doesn't sit idle if that connection is waiting for something, such as the rest of a client's request or a response from an upstream server. Instead, it sets the connection aside and moves on to handle other connections that are ready to proceed. When the I/O operation completes, the connection rejoins the queue of ready connections.

The worker uses an event loop to rapidly cycle through all active connections, processing whichever ones are ready. It's not managing just a few connections: a single worker can handle thousands at once, switching between them faster than a human could perceive.

The Secret Weapon

epoll, kqueue

The technical breakthrough that makes this possible involves system calls like epoll on Linux and kqueue on BSD/macOS. These are efficient ways for programs to monitor thousands of network connections simultaneously without the overhead of checking each one individually.

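Older interfaces such as select and poll effectively require the server to ask, "Is connection 1 ready? Is connection 2 ready? Is connection 3 ready?" on every call, so the cost grows with the number of connections being watched rather than the number that are actually active.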

With epoll the server instead tells the operating system, "Monitor all these connections for me, and let me know when any of them have something to do."

When a client sends data, like requesting a web page, epoll immediately notifies the event loop: "Connection #451 is ready." The worker processes that specific event instantly and moves on. No time wasted checking connections that aren't ready.

This creates a remarkably efficient cycle. The event loop processes all ready connections, then immediately asks epoll, "What's ready now?" If new connections become ready even microseconds later, epoll catches them and the cycle continues. The server stays completely non-blocking and responsive.

Even if thousands of connections become active simultaneously, NGINX handles them efficiently because epoll tells it exactly which connections need attention, and most operations (reading HTTP headers, serving cached files, or sending responses) are lightweight and fast.
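The "ask the OS what's ready, handle only that, repeat" cycle can be sketched with Python's standard `selectors` module, whose `DefaultSelector` picks epoll on Linux and kqueue on BSD/macOS. This is a toy illustration rather than NGINX's actual C implementation; `make_listener`, `run_once`, and the uppercase echo are names and behavior invented for the example.

```python
import selectors
import socket


def make_listener(host="127.0.0.1", port=0):
    """Create a non-blocking listen socket; port 0 lets the OS pick a free port."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind((host, port))
    listener.listen(128)  # backlog: the "waiting room" for new connections
    listener.setblocking(False)
    return listener


def run_once(sel, listener, timeout=1.0):
    """One loop iteration: ask the OS what is ready, then touch only those sockets."""
    handled = 0
    for key, _mask in sel.select(timeout):
        sock = key.fileobj
        if sock is listener:
            # A new connection is waiting in the backlog: accept and start watching it.
            conn, _addr = listener.accept()
            conn.setblocking(False)
            sel.register(conn, selectors.EVENT_READ)
        else:
            data = sock.recv(4096)
            if data:
                # Toy response. A real server would buffer output and wait for
                # write readiness instead of assuming the send completes at once.
                sock.sendall(data.upper())
            else:
                # Empty read means the client closed the connection.
                sel.unregister(sock)
                sock.close()
        handled += 1
    return handled
```

A worker would register the listener once with `sel.register(listener, selectors.EVENT_READ)` and then call `run_once` in an endless loop. Idle connections cost nothing per iteration because the selector never reports them.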

NGINX vs. Node.js

Similar Concepts, Different Implementations

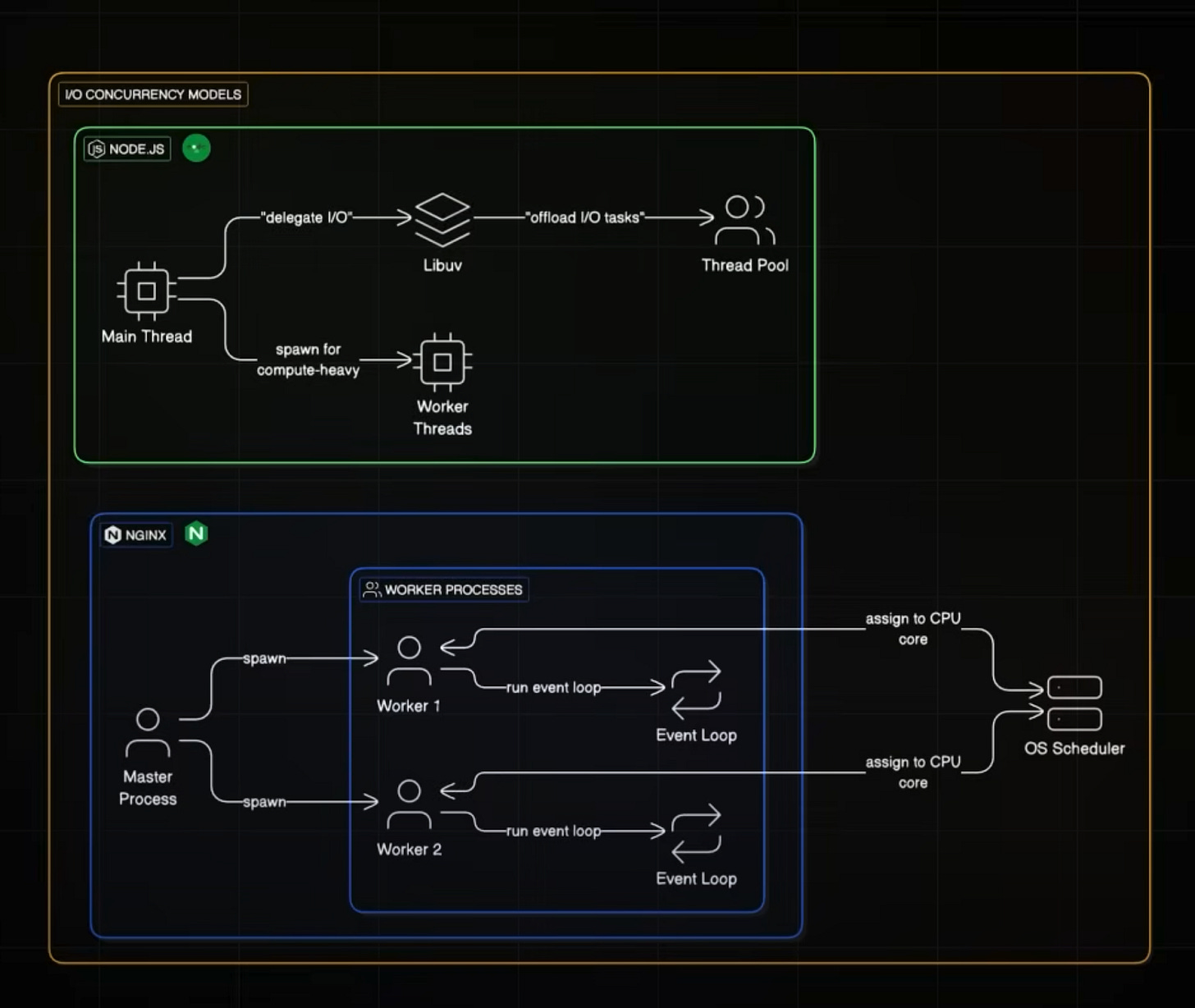

If you've worked with Node.js, this event-driven approach might sound familiar. Both NGINX and Node.js use non-blocking, event-driven architectures, but they implement them differently.

Node.js starts with a single thread running JavaScript code. It uses a library called libuv to handle I/O asynchronously: network sockets are watched through the same epoll and kqueue mechanisms, while a hidden thread pool handles operations that lack a non-blocking interface, such as file system access and DNS lookups. For CPU-intensive tasks, you need to explicitly use worker threads to avoid blocking the main event loop.

NGINX takes a more structured approach. It creates multiple worker processes, not threads, typically one per CPU core. Each worker process is independent, with its own memory space and event loop. This provides better isolation and stability, since workers share no state by default and a crash in one does not take down the others.

The distinction between processes and threads matters. Threads share memory within the same process, which can lead to race conditions and complex synchronization requirements. NGINX workers are separate processes that coordinate only through explicitly configured shared memory zones and messages from the master process, making the system more predictable and easier to debug.

Real-World Performance

The practical implications are dramatic. A traditional threaded server might handle hundreds or low thousands of concurrent connections before performance degrades. A well-configured NGINX server can handle hundreds of thousands of concurrent connections on the same hardware.

Companies routinely run NGINX instances serving 100,000+ concurrent connections on standard server hardware.

The memory footprint remains modest because you're not allocating thread stacks for each connection. CPU usage stays efficient because there's minimal context switching overhead.

The architecture also spreads load naturally across CPU cores. If you have an 8-core server, you typically run 8 worker processes, and you can optionally pin each one to a specific core with the worker_cpu_affinity directive. Pinning reduces CPU cache thrashing and makes performance scaling more predictable.

Configuration and Tuning

Understanding the architecture is only half the battle. Making NGINX perform optimally requires thoughtful configuration. The number of worker processes should typically match your CPU cores, which is what the worker_processes auto setting does. The worker_connections setting caps how many simultaneous connections each worker can hold open, including connections to upstream servers. The default is 512, and values of 1024-4096 are common starting points.
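A rough capacity estimate follows from these two settings. The sketch below uses a hypothetical helper name and one simplifying assumption: when NGINX acts as a reverse proxy, each client occupies two of a worker's connection slots (one to the client, one to the upstream server).

```python
def max_clients(worker_processes, worker_connections, proxying=False):
    """Upper bound on simultaneous clients for a given configuration."""
    # When proxying, each client needs a second connection to the upstream.
    per_worker = worker_connections // 2 if proxying else worker_connections
    return worker_processes * per_worker


print(max_clients(8, 4096))                 # serving content directly
print(max_clients(8, 4096, proxying=True))  # acting as a reverse proxy
```

Treat the result as a ceiling rather than a prediction: operating system limits and available memory usually bind first.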

Operating system limits become crucial at scale. The maximum number of open file descriptors must be raised, both at the OS level and through the worker_rlimit_nofile directive, since each connection requires a file descriptor (two when NGINX proxies to an upstream server). Network buffer sizes might need tuning for high-throughput applications. The listen socket backlog should be large enough to absorb traffic spikes without dropping connections.
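Whether the descriptor limit is high enough can be checked from any process with Python's Unix-only `resource` module. The helper name, the dictionary layout, and the `reserve` allowance for log files and listen sockets are assumptions made for this sketch.

```python
import resource  # Unix-only standard library module


def fd_headroom(worker_connections, fds_per_connection=1, reserve=64):
    """Compare this process's soft descriptor limit with a rough estimate of need."""
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    # `reserve` is a hypothetical allowance for logs, listen sockets, and so on.
    needed = worker_connections * fds_per_connection + reserve
    unlimited = soft == resource.RLIM_INFINITY
    return {
        "soft": soft,
        "hard": hard,
        "needed": needed,
        "ok": unlimited or soft >= needed,
    }


print(fd_headroom(4096, fds_per_connection=2))
```

The numbers reflect the limits of the process running the script, so run it under the same user and service manager as NGINX for a meaningful answer.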

Monitoring becomes essential. Key metrics include active connections per worker, request processing time, and system resource utilization. Unlike threaded servers where you might monitor thread pool exhaustion, with NGINX you're watching for event loop saturation and connection queue depths.
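One common source for these numbers is NGINX's stub_status page, assuming that module is compiled in and exposed at some URL on your server. The parser below works on the plain-text format the module emits; the function name and the derived `dropped` field are choices made for this sketch, and the sample values are illustrative.

```python
import re

SAMPLE = """Active connections: 291
server accepts handled requests
 16630948 16630948 31070465
Reading: 6 Writing: 179 Waiting: 106
"""


def parse_stub_status(text):
    """Turn stub_status plain-text output into a dictionary of integers."""
    active = re.search(r"Active connections:\s+(\d+)", text)
    totals = re.search(r"^[ \t]*(\d+)[ \t]+(\d+)[ \t]+(\d+)[ \t]*$", text, re.M)
    states = re.search(r"Reading:\s+(\d+)\s+Writing:\s+(\d+)\s+Waiting:\s+(\d+)", text)
    if not (active and totals and states):
        raise ValueError("unrecognized stub_status output")
    accepts, handled, requests = map(int, totals.groups())
    reading, writing, waiting = map(int, states.groups())
    return {
        "active": int(active.group(1)),
        "accepts": accepts,
        "handled": handled,
        "requests": requests,
        "reading": reading,
        "writing": writing,
        "waiting": waiting,
        # Accepted but never handled usually means a resource limit was hit.
        "dropped": accepts - handled,
    }


print(parse_stub_status(SAMPLE))
```

A `dropped` value that keeps growing is a hint that worker_connections or a descriptor limit is too low for the traffic being served.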

The Trade-offs: When NGINX Isn't the Answer

NGINX's architecture excels for I/O-bound workloads but isn't optimal for every scenario. CPU-intensive operations can block the event loop since workers process events sequentially.

If your application needs to perform complex calculations or data processing for each request, a threaded or process-pool application server may serve you better, because a slow request ties up only its own thread instead of stalling every connection on a worker.

The single-threaded nature of each worker also means blocking code is dangerous. A blocking call or runaway loop in one request, in a third-party module for example, stalls the entire worker and delays thousands of other connections. In a threaded server, a stalled thread delays only its own request, although a crash still takes down every thread in that process.
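A tiny simulation shows why one heavy request hurts so much on a single event loop. It assumes a worker that handles events strictly one after another; the function name and the millisecond costs are invented for the illustration.

```python
def completion_times(costs_ms):
    """Return when each event finishes if one worker runs them back to back."""
    clock = 0
    finished = []
    for cost in costs_ms:
        clock += cost  # nothing else runs on this worker while an event is handled
        finished.append(clock)
    return finished


# Three cheap events and one 500 ms computation in second position.
print(completion_times([1, 500, 1, 1]))
```

Every event queued behind the expensive one inherits its full delay, even though each would take only a millisecond on its own.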

Memory usage patterns differ too. While NGINX uses less memory per connection, it sets aside memory up front for shared zones such as SSL session caches, rate-limiting state, and cache metadata. The optimal architecture depends on your specific use case and traffic patterns.

Looking Forward

The Evolution of High-Performance Systems

NGINX's architectural principles show up throughout modern system design. Kubernetes controllers, for example, react to watch events on cluster state rather than polling each of thousands of pods. Cloud computing platforms apply similar ideas to manage large numbers of virtual machines and serverless functions.

The rise of WebAssembly and edge computing creates new opportunities for NGINX-style architectures. When applications need to run close to users with minimal resource overhead, event-driven systems provide excellent performance density. The same principles that allow NGINX to handle massive web traffic enable edge applications to run efficiently on resource-constrained hardware.

Programming languages and frameworks increasingly adopt related patterns. Go's goroutines, Rust's async/await, and Java's Project Loom all rest on the same core idea: multiplex many waiting tasks onto a small number of operating system threads. The future of high-performance computing isn't necessarily about faster processors. It's about using existing resources more efficiently.

The Enduring Lessons

NGINX succeeded not by brute force but by reimagining how servers should work. Instead of fighting the limitations of traditional threading, it embraced a fundamentally different approach that turns I/O waiting time into an opportunity rather than a bottleneck.

When building systems that handle high concurrency, the architecture matters more than raw speed. Sometimes the most elegant solution isn't about doing things faster. It's about doing them differently.