Understanding MCP

How AI Assistants Connect to External Tools

AI assistants today can connect to external tools and data sources, but each system does this differently. ChatGPT introduced its own plugin architecture (since replaced by custom GPTs with actions), Claude has its own tool-use system, and other AI assistants implement their own proprietary methods for external integrations.

Model Context Protocol (MCP) solves this fragmentation. Introduced by Anthropic in late 2024 as an open standard, it functions like a universal adapter for AI systems.

MCP provides a standardized way for any AI assistant to connect with external tools, databases, APIs, and services. Instead of building a custom integration for every combination of AI system and tool, each assistant and each tool implements the protocol once, and any MCP-compatible assistant can then work with any MCP-compatible tool.

Architecture: Client-Server Communication

MCP follows a client-server architecture similar to how web browsers communicate with websites.

The system consists of three main components:

Host Application (AI Agent): The main AI-powered system, such as a chatbot, coding assistant, or voice assistant. It contains the large language model (LLM) and the decision-making logic that determines when to use external tools.

MCP Client: A component within the AI Agent that acts as the connector between the AI and external tools. The AI doesn’t communicate with tools directly; it uses the MCP Client to handle all external interactions. Each client maintains a one-to-one connection with a single server, so if an AI agent needs to connect to both a calendar and a database, it creates a separate MCP client for each server.

MCP Server: The external tool or data source wrapped in the MCP interface. This could be a local program running on the same machine or a remote cloud service. MCP servers provide capabilities to the AI through three types of offerings:

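Prompts: Pre-defined, reusable prompt templates that guide the AI through specific tasks or workflows, including how to use the server’s tools effectively

Resources: Data the server exposes for the AI to read, such as file contents, database records, or API responses, each identified by a URI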

Tools & Functions: Actions the AI can perform, like sending emails, running calculations, or executing commands
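Each of the three offerings maps onto a pair of JSON-RPC methods in the MCP specification: one to discover what the server offers and one to use a specific item. The Python sketch below builds those requests. The method names follow the specification, while the make_request helper, the reports://latest URI, and the tool and prompt names are illustrative assumptions.

```python
import json
from typing import Optional

# Per the MCP specification: (method to discover items, method to use one item).
CAPABILITY_METHODS = {
    "prompts": ("prompts/list", "prompts/get"),
    "resources": ("resources/list", "resources/read"),
    "tools": ("tools/list", "tools/call"),
}


def make_request(request_id: int, method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object (hypothetical helper)."""
    request = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        request["params"] = params
    return request


list_tools, call_tool = CAPABILITY_METHODS["tools"]
_, read_resource = CAPABILITY_METHODS["resources"]
_, get_prompt = CAPABILITY_METHODS["prompts"]

examples = [
    # Ask the server which tools it offers.
    make_request(1, list_tools),
    # Invoke one tool by name with its arguments (hypothetical tool).
    make_request(2, call_tool, {
        "name": "send_email",
        "arguments": {"to": "alice@example.com", "subject": "Hi", "body": "Hello"},
    }),
    # Read a resource by its URI (hypothetical URI).
    make_request(3, read_resource, {"uri": "reports://latest"}),
    # Fetch a prompt template by name (hypothetical prompt).
    make_request(4, get_prompt, {"name": "summarize_report"}),
]
for example in examples:
    print(json.dumps(example))
```

Tools are typically invoked by the model, resources are read on behalf of the application, and prompts are usually picked by the user, but all three travel as the same kind of JSON-RPC request.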

Communication Protocol and Transport

MCP uses JSON-RPC 2.0 as its communication format, meaning clients and servers exchange structured JSON messages including requests, responses, and notifications. The protocol is transport-agnostic, supporting multiple communication methods:

Standard Input/Output (stdio): For local communication between processes on the same machine

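HTTP with Server-Sent Events (SSE): For remote servers, where the client sends messages with HTTP POST requests and the server streams messages back over SSE (newer revisions of the specification replace this with a “Streamable HTTP” transport that can still use SSE for streaming)

Custom transports such as WebSockets: For cloud-based AI agents and more complex networking scenarios, as long as they carry the same JSON-RPC messages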

The transport layer ensures that JSON-RPC messages travel reliably between clients and servers, regardless of whether they’re running locally or across networks.
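To show how the message format and the transport fit together, the Python sketch below frames JSON-RPC messages the way the stdio transport does: per the MCP specification, each message is one line of JSON and must not contain embedded newlines. It also shows how the three message kinds differ: a request carries a method and an id, a response echoes that id with a result (or an error), and a notification has a method but no id, so no reply is expected. The helper functions are hypothetical, and io.StringIO stands in for a real process's stdin/stdout so the example runs on its own.

```python
import io
import json
from typing import Optional, TextIO


def classify(message: dict) -> str:
    """Tell JSON-RPC 2.0 message kinds apart by the fields they carry."""
    if "method" in message:
        # Requests expect a reply, so they carry an id; notifications do not.
        return "request" if "id" in message else "notification"
    if "id" in message and ("result" in message or "error" in message):
        return "response"
    raise ValueError("unrecognized message shape")


def write_message(stream: TextIO, message: dict) -> None:
    """Frame one message for stdio: a single line of JSON ending in a newline."""
    # json.dumps without indent emits no raw newlines; newlines inside strings are escaped.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_message(stream: TextIO) -> Optional[dict]:
    """Read the next framed message, or return None once the stream is exhausted."""
    line = stream.readline()
    return json.loads(line) if line else None


# io.StringIO stands in for the pipe between a host and a local server.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                     "params": {"name": "send_email",
                                "arguments": {"body": "Hi,\nSee attached."}}})
write_message(pipe, {"jsonrpc": "2.0", "id": 1,
                     "result": {"content": [{"type": "text", "text": "Email sent"}]}})
pipe.seek(0)

received = []
while (message := read_message(pipe)) is not None:
    received.append(message)
print([classify(m) for m in received])  # ['notification', 'request', 'response']
```

Only write_message and read_message know about lines and streams; an HTTP or WebSocket transport would replace those two functions while the JSON messages stay exactly the same.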

Implementation: Building MCP Servers

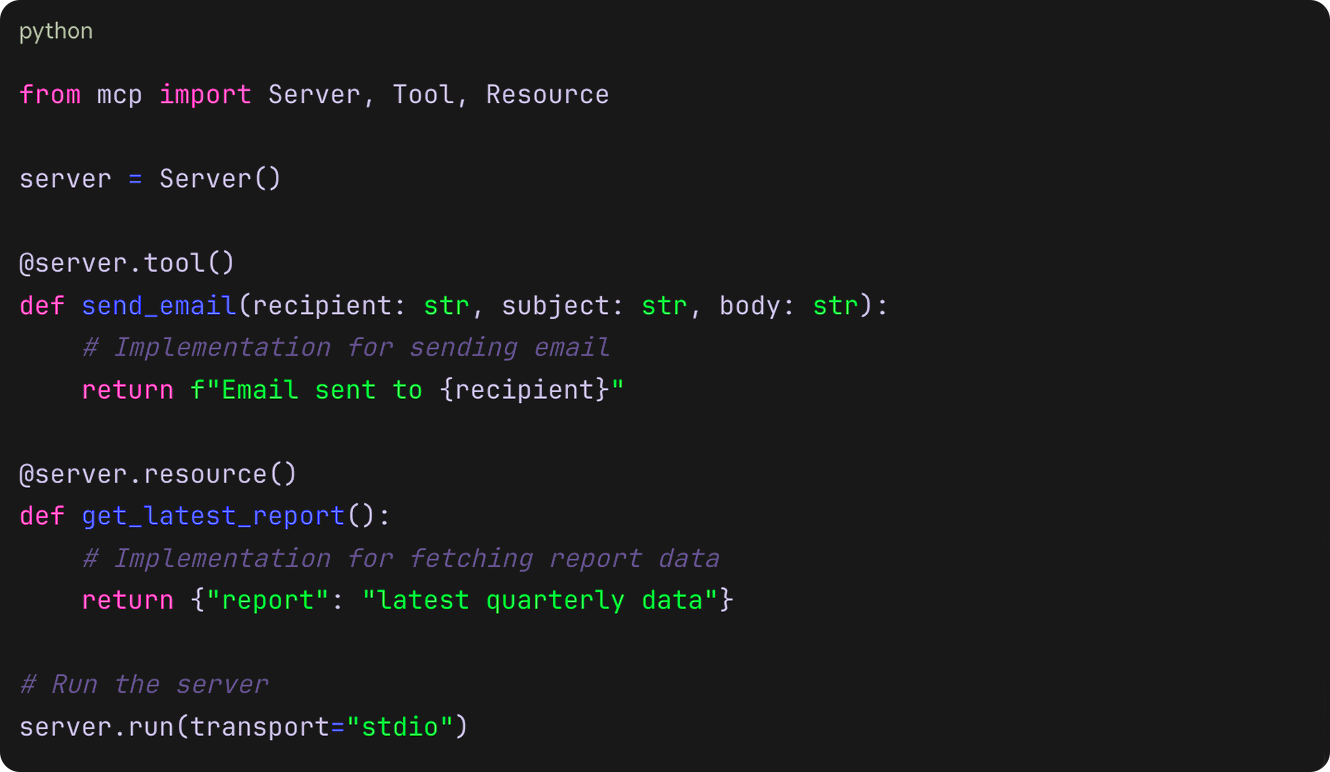

Creating an MCP server involves defining the tools and resources you want to expose to AI assistants. Using the official Python SDK, you might write a small server that exposes a send_email tool and a get_latest_report resource.

The SDK handles packaging function names, arguments, and return values into the proper MCP protocol format. When you run this server, it opens a JSON-RPC communication channel waiting for AI clients to call send_email or request get_latest_report.

Deployment and Integration

MCP servers can be deployed in various configurations. For local deployments, servers might run as background processes communicating via stdio. For remote deployments, they typically operate as microservices accessible over HTTP with Server-Sent Events.
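In the local configuration, the host typically launches the server as a child process and exchanges newline-delimited JSON-RPC messages over its stdin and stdout. The Python sketch below shows that pattern using only the standard library. The LocalServer class and the inline TOY_SERVER script are hypothetical: the toy server only echoes the method name back instead of implementing MCP, which keeps the example self-contained.

```python
import json
import subprocess
import sys
from typing import Optional

# Hypothetical toy server: replies to every request with a result echoing the method name.
# A real MCP server would implement initialize, tools/list, tools/call, and so on.
TOY_SERVER = r"""
import json, sys
while True:
    line = sys.stdin.readline()
    if not line:
        break  # the host closed our stdin, so shut down
    message = json.loads(line)
    if "id" in message:  # notifications get no reply
        reply = {"jsonrpc": "2.0", "id": message["id"], "result": {"echo": message["method"]}}
        print(json.dumps(reply), flush=True)
"""


class LocalServer:
    """Runs a server as a background child process and talks to it over its stdio pipes."""

    def __init__(self, command: list[str]) -> None:
        self.process = subprocess.Popen(
            command, stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True
        )
        self.next_id = 1

    def request(self, method: str, params: Optional[dict] = None) -> dict:
        message = {"jsonrpc": "2.0", "id": self.next_id, "method": method}
        if params is not None:
            message["params"] = params
        self.next_id += 1
        # One message per line, as in the stdio transport.
        self.process.stdin.write(json.dumps(message) + "\n")
        self.process.stdin.flush()
        # Block until the server writes its one-line reply.
        return json.loads(self.process.stdout.readline())

    def close(self) -> None:
        self.process.stdin.close()  # EOF tells the server loop to exit
        self.process.wait(timeout=5)
        self.process.stdout.close()


server = LocalServer([sys.executable, "-c", TOY_SERVER])
first = server.request("tools/list")
second = server.request("resources/list")
server.close()
```

A remote deployment swaps the pipes for HTTP requests and an event stream, but the host still sends the same request objects and matches replies by id.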

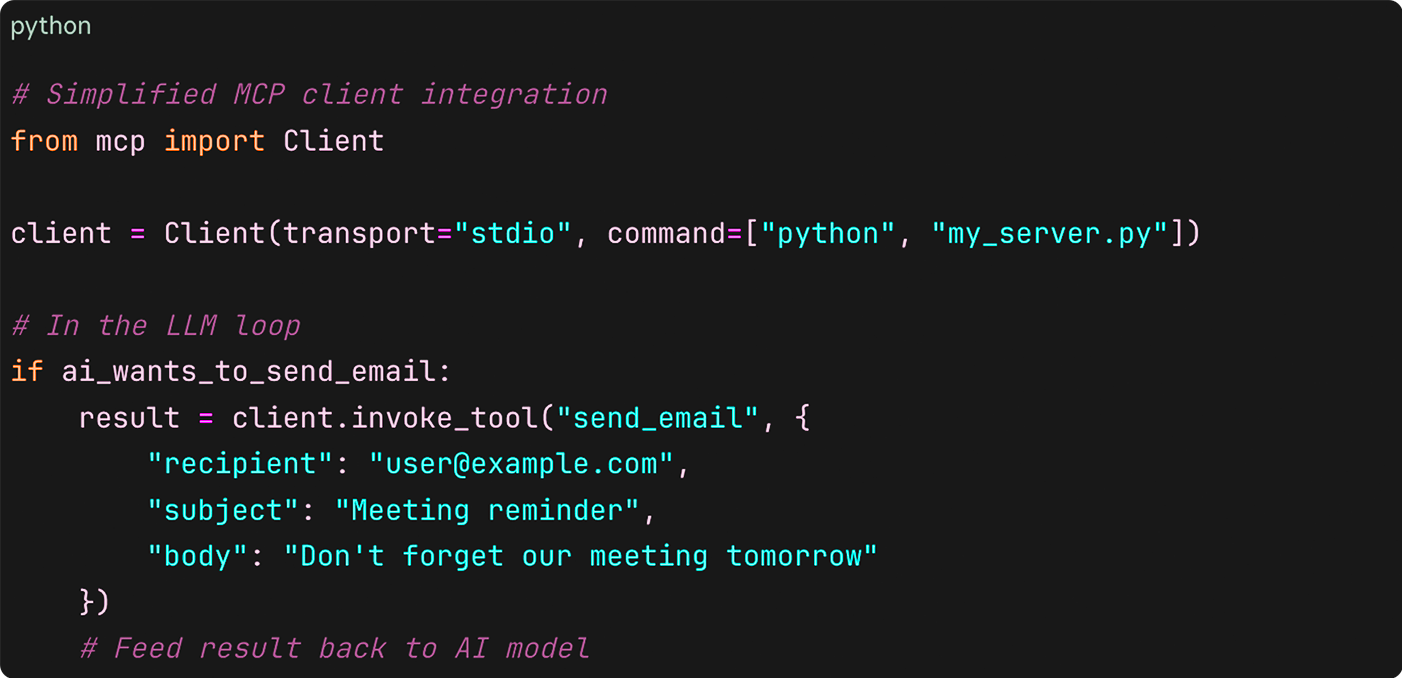

On the AI system side, integrating MCP requires implementing what’s called the “LLM loop”, the process that:

Analyzes the AI model’s output

Identifies which specific MCP tool should be called

Invokes the appropriate tool through the MCP client

Feeds the tool’s response back to the AI model
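The four steps above can be sketched as a short Python loop. Everything here is hypothetical: fake_model stands in for an LLM that either answers in plain text or requests a tool by emitting a JSON object with "tool" and "arguments" keys, and FakeMCPClient stands in for a real MCP client, whose call_tool would send a tools/call request to its server. In practice, hosts often rely on the model provider's structured tool-calling output rather than parsing free text.

```python
import json
from typing import Callable


class FakeMCPClient:
    """Hypothetical stand-in for an MCP client; a real one would send tools/call to its server."""

    def __init__(self, tools: dict[str, Callable[..., str]]) -> None:
        self.tools = tools

    def call_tool(self, name: str, arguments: dict) -> str:
        return self.tools[name](**arguments)


def fake_model(messages: list[dict]) -> str:
    """Hypothetical model: asks for a tool on the first turn, then answers with its result."""
    if messages[-1]["role"] == "user":
        return json.dumps({"tool": "get_weather", "arguments": {"city": "Paris"}})
    return f"Here is what I found: {messages[-1]['content']}"


def llm_loop(prompt: str, model: Callable[[list[dict]], str],
             clients: dict[str, FakeMCPClient], max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        output = model(messages)
        # 1. Analyze the model's output: a JSON tool request, or a final answer?
        try:
            call = json.loads(output)
        except json.JSONDecodeError:
            return output
        if not isinstance(call, dict) or "tool" not in call:
            return output
        # 2. Identify which client serves the requested tool.
        client = clients[call["tool"]]
        # 3. Invoke the tool through that MCP client.
        result = client.call_tool(call["tool"], call.get("arguments", {}))
        # 4. Feed the result back to the model for its next turn.
        messages.append({"role": "assistant", "content": output})
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("model did not produce a final answer within max_steps")


weather = FakeMCPClient({"get_weather": lambda city: f"Sunny in {city}"})
answer = llm_loop("What's the weather in Paris?", fake_model, {"get_weather": weather})
print(answer)  # Here is what I found: Sunny in Paris
```

Mapping each tool name to the client that owns it keeps step 2 a simple lookup, which fits the one-client-per-server design, and the max_steps limit stops a model that keeps requesting tools from looping forever.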

Benefits and Practical Implications

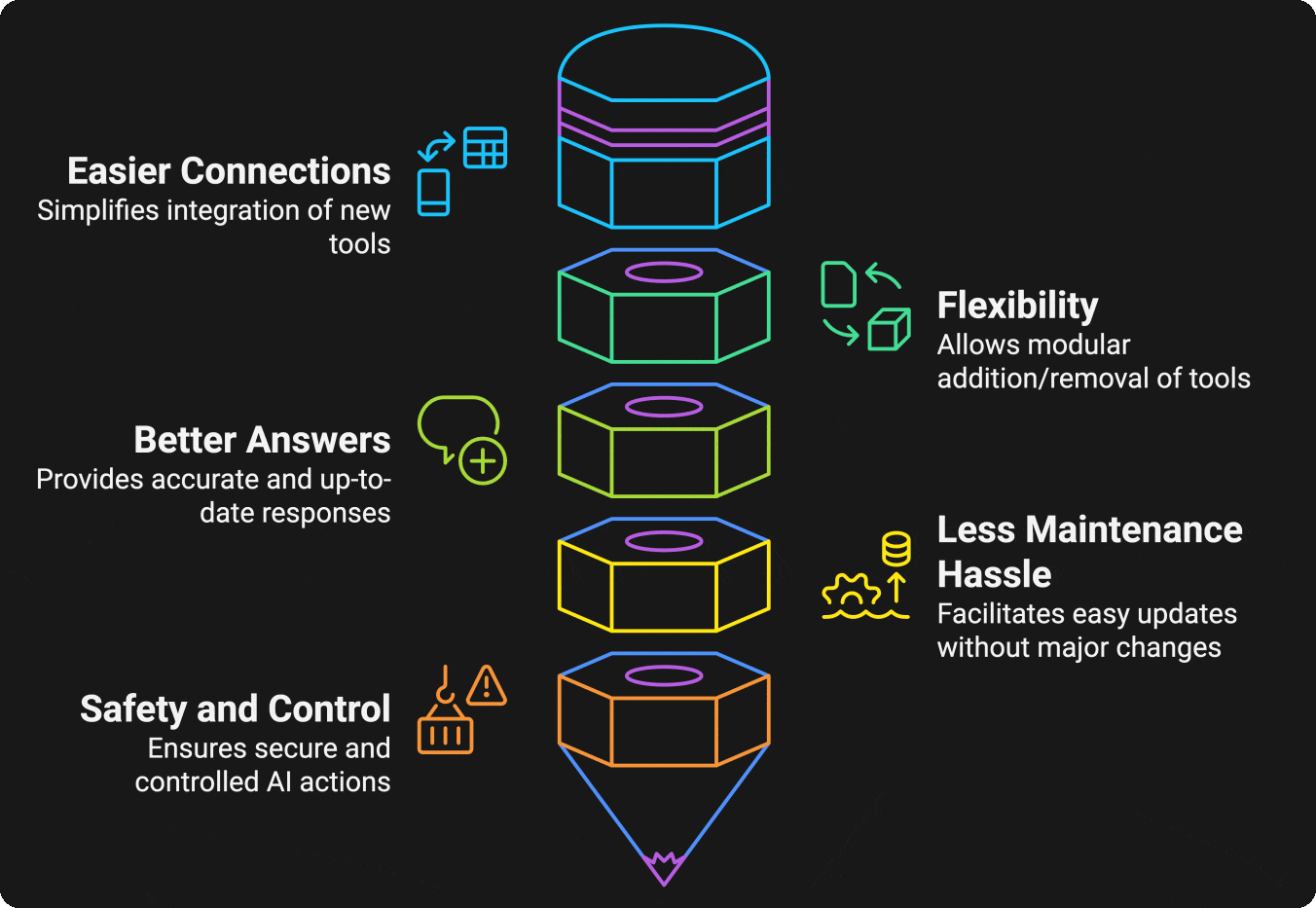

MCP makes AI assistants far more adaptable. Like adding apps to your phone, new tools can be plugged in without breaking what’s already there. Instead of relying only on training data that may be out of date, the AI can tap into live data and specialized tools, which can make its answers more accurate. When a tool changes, developers update the relevant MCP server instead of retraining the model or rewriting the assistant’s integration code.

MCP provides a clean framework for tool interactions, though you’ll still want dedicated security controls to lock down access to your critical systems. (More on this in future posts.)

The Path Forward

As the protocol matures and adoption grows, we’ll see AI assistants become seamlessly integrated into existing workflows and business systems. The open nature of MCP enables tool developers and AI builders to innovate independently while maintaining interoperability, accelerating the development of specialized solutions across different domains.

In my next post, I’ll explore emerging MCP trends, compare it with alternative integration approaches, and discuss how it fits into the broader AI tooling ecosystem.
