Inside High-Frequency Trading

How Machines Trade in Microseconds

While you're reading this sentence, somewhere in a data center near the New York Stock Exchange, a computer system has already ingested thousands of market updates, evaluated hundreds of trading opportunities, and executed dozens of trades—all in the time it takes you to blink once.

This isn't science fiction. This is high-frequency trading, where algorithms and specialized hardware battle for microsecond advantages in financial markets. Every day, these systems process millions of trades, moving billions of dollars based on mathematical models that react faster than human cognition allows.

But here's what most people don't realize: HFT isn't just about speed for speed's sake. It's about solving a fundamental economic puzzle.

HFT is about finding tiny price inefficiencies in markets and correcting them before anyone else notices they exist.

The engineering challenge is extraordinary: building systems that can process market data, make trading decisions, and execute orders in less time than it takes light to travel from New York to Chicago.

Let me take you inside one of these systems and show you exactly how they work.

The Speed Imperative: Why Microseconds Matter

Before we dive into the technical architecture, we need to understand why speed matters so profoundly in financial markets. The answer lies in a simple principle: information has value, but only until everyone else has it too.

Consider a market-making strategy, one of the most common HFT approaches. You're essentially acting as a middleman, continuously offering to buy a stock at $9.99 and sell it at $10.01. Each time you buy a share from one trader at your bid and sell it to another at your ask, you earn the $0.02 spread. Multiply this by thousands of stocks and thousands of transactions per second, and those pennies add up to significant profits.

But here's the catch: this only works if you can react to market changes faster than your competitors. If the stock's true value suddenly jumps to $10.50, you need to pull your $10.01 sell order immediately—before someone buys your shares at the old price and leaves you holding a loss.
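To make the arithmetic concrete, here is a minimal sketch using the prices from the example above. The 100-share size and the function names are assumptions chosen for illustration, and fees are ignored.

```python
from decimal import Decimal

BID = Decimal("9.99")   # price at which the market maker offers to buy
ASK = Decimal("10.01")  # price at which the market maker offers to sell


def round_trip_pnl(shares: int) -> Decimal:
    """Profit from buying `shares` at the bid and selling them at the ask."""
    return (ASK - BID) * shares


def stale_quote_loss(shares: int, new_fair_value: Decimal) -> Decimal:
    """Loss from selling at the stale ask after fair value has jumped."""
    return (new_fair_value - ASK) * shares


spread_profit = round_trip_pnl(100)                     # one 100-share round trip
adverse_loss = stale_quote_loss(100, Decimal("10.50"))  # picked off once
round_trips_to_recover = adverse_loss / spread_profit

print(spread_profit, adverse_loss, round_trips_to_recover)
```

Under these assumptions, one stale fill costs as much as 24.5 successful round trips earn, which is why cancelling quickly matters more than quoting often.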

In this environment, a single millisecond delay can mean the difference between profitable trading and catastrophic losses. That's why HFT firms spend millions on specialized hardware and co-locate their servers in the same buildings as stock exchanges.

They're not just buying faster computers; they're buying time itself.

This economic reality drives every architectural decision in HFT systems. Let's see how they're built to win this race against time.

The Data Stream: Capturing Market Reality at Light Speed

Every HFT system begins with the same fundamental challenge: how do you capture and process the firehose of market data that exchanges produce? We're not talking about the stock prices you see on financial websites—those are already ancient history by HFT standards.

Instead, these systems tap directly into exchange data feeds using specialized network protocols. Think of it as the difference between getting news from a newspaper versus having a direct line to the newsroom.

Nasdaq's data feeds alone can produce millions of market updates per second during peak trading activity: every price change, every new order, every cancellation.

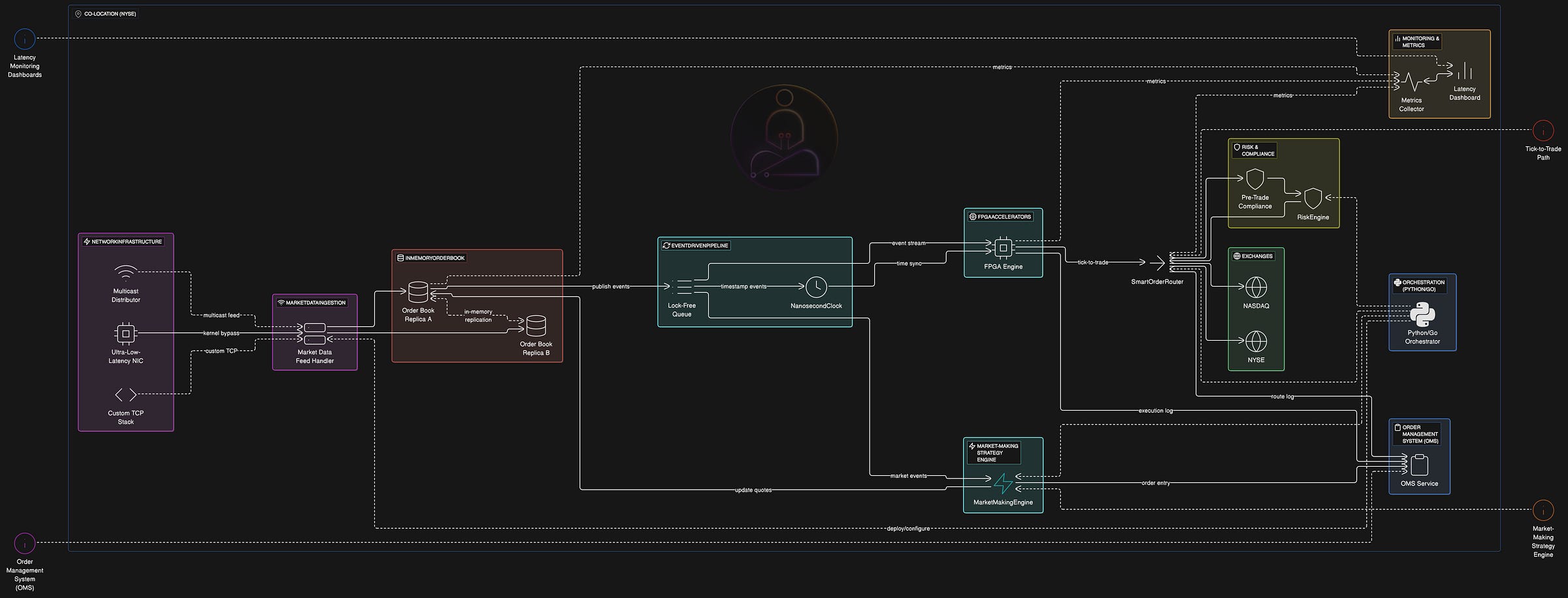

The hardware receiving this data looks nothing like a typical server. Ultra-low-latency network interface cards use kernel bypass to deliver packets straight to the trading application, skipping the operating system's network stack and shaving off precious microseconds. Some firms use specialized hardware from vendors like Solarflare (acquired by Xilinx, which is now part of AMD) that can process network packets faster than a traditional kernel TCP/IP stack.

But raw speed means nothing without accuracy. The market data feed handler—a critical piece of software—must parse this incoming stream perfectly. It's decoding millions of messages per second, each containing order book updates, trade executions, or administrative notifications. A single parsing error could trigger incorrect trading decisions worth millions of dollars.
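A feed handler's core job can be sketched in a few lines. The message layout below is hypothetical (it is not a real exchange format), and production handlers are typically written in C++ or implemented in hardware, but the shape is the same: fixed-width binary fields, strict validation, and no tolerance for malformed input.

```python
import struct
from dataclasses import dataclass

# Hypothetical fixed-width layout (an assumption, not a real exchange spec):
# type(1) seq(8) symbol(8) side(1) price(4, in 1/10000 dollar) qty(4), big-endian
ADD_ORDER = struct.Struct(">cQ8scII")


@dataclass(frozen=True)
class AddOrder:
    seq: int
    symbol: str
    side: str
    price_ticks: int
    qty: int


def parse_add_order(buf: bytes) -> AddOrder:
    """Decode one add-order message, rejecting anything malformed."""
    if len(buf) != ADD_ORDER.size:
        raise ValueError(f"expected {ADD_ORDER.size} bytes, got {len(buf)}")
    msg_type, seq, symbol, side, price, qty = ADD_ORDER.unpack(buf)
    if msg_type != b"A":
        raise ValueError(f"unexpected message type {msg_type!r}")
    if side not in (b"B", b"S"):
        raise ValueError(f"unexpected side {side!r}")
    return AddOrder(seq, symbol.decode("ascii").rstrip(), side.decode("ascii"), price, qty)


# Build a sample message and decode it again.
raw = ADD_ORDER.pack(b"A", 1, b"AAPL    ", b"B", 99800, 200)
order = parse_add_order(raw)
print(order)
```

Prices are carried as integers in fixed units rather than floats, so the parser never introduces rounding error.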

The decoded market data then flows into what might be the most performance-critical component of the entire system: the in-memory order book.

The Order Book: A Real-Time Portrait of Market Intent

If market data is the nervous system of an HFT system, the order book is its memory—a constantly updated snapshot of every buy and sell order for each traded security.

Traditional databases are far too slow for this task. Instead, HFT systems maintain these order books entirely in RAM, using specialized data structures optimized for microsecond updates.

When a new bid comes in at $9.98, the system doesn't just add it to a list—it must immediately recalculate the best bid price, update depth calculations, and trigger any dependent algorithms.
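As a rough illustration, one side of a price-level book might look like the sketch below. The class and method names are assumptions, prices are integer cents, and a real system would use a cache-friendly structure in a compiled language. The point is only that the best price and depth stay available after every update.

```python
import bisect


class BookSide:
    """One side of a price-level book: total resting quantity per price."""

    def __init__(self, is_bid):
        self.is_bid = is_bid
        self.qty_at = {}   # price in cents -> total resting quantity
        self.prices = []   # active prices, kept sorted ascending

    def add(self, price, qty):
        """Add quantity at a price, creating the level if it is new."""
        if price not in self.qty_at:
            bisect.insort(self.prices, price)
            self.qty_at[price] = 0
        self.qty_at[price] += qty

    def remove(self, price, qty):
        """Remove quantity (cancel or fill); drop the level once it is empty."""
        remaining = self.qty_at[price] - qty  # KeyError if the level is unknown
        if remaining > 0:
            self.qty_at[price] = remaining
        else:
            del self.qty_at[price]
            self.prices.pop(bisect.bisect_left(self.prices, price))

    def best(self):
        """Highest price for bids, lowest for asks, or None if empty."""
        if not self.prices:
            return None
        return self.prices[-1] if self.is_bid else self.prices[0]

    def depth(self, levels):
        """Total quantity across the `levels` best price levels."""
        if levels <= 0:
            return 0
        top = self.prices[-levels:] if self.is_bid else self.prices[:levels]
        return sum(self.qty_at[p] for p in top)


bids = BookSide(is_bid=True)
bids.add(997, 100)
bids.add(998, 300)     # the new bid at $9.98 becomes the best bid
best_after_add = bids.best()
depth_after_add = bids.depth(2)
bids.remove(998, 300)  # cancelling it restores the previous best bid
best_after_cancel = bids.best()
```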

The engineering challenge is immense. Consider that Apple's stock might receive thousands of order updates per second. Each update must be processed in sequence, maintaining perfect accuracy while updating derived calculations like volume-weighted average prices or liquidity imbalances. A single race condition or lock contention could introduce millisecond delays that render the entire system uncompetitive.
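The two derived values mentioned above can be maintained incrementally instead of being recomputed from scratch. This is a minimal sketch: the names are assumptions, prices are integer cents, and the imbalance definition used here, (bid - ask) / (bid + ask), is one common convention among several.

```python
class RunningVwap:
    """Volume-weighted average price, updated one trade at a time."""

    def __init__(self):
        self.notional = 0  # running sum of price * quantity
        self.volume = 0    # running sum of quantity

    def on_trade(self, price, qty):
        self.notional += price * qty
        self.volume += qty

    def value(self):
        """Current VWAP, or None before the first trade."""
        return self.notional / self.volume if self.volume else None


def book_imbalance(bid_qty, ask_qty):
    """Ranges from -1.0 (all quantity on the ask) to +1.0 (all on the bid)."""
    total = bid_qty + ask_qty
    return (bid_qty - ask_qty) / total if total else 0.0


vwap = RunningVwap()
vwap.on_trade(1000, 100)  # 100 shares at $10.00
vwap.on_trade(1002, 300)  # 300 shares at $10.02
current_vwap = vwap.value()
imbalance = book_imbalance(600, 200)
print(current_vwap, imbalance)
```

Each update is a couple of additions, so the cost per message stays constant no matter how many trades have been seen.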

Many HFT firms guard against this through replication: maintaining multiple synchronized copies of each order book across different CPU cores or even different servers. If one replica falls behind or encounters an error, the system can instantly fail over to another copy without missing a beat. It's like having multiple photographers capturing the same event from different angles, ensuring you never miss the critical moment.

But maintaining perfect order books is only the beginning. The real challenge is deciding what to do with this information—and doing it fast enough to matter.

The Decision Engine: Algorithms That Think in Nanoseconds

Here's where HFT systems become truly fascinating from an engineering perspective. The strategies these systems run aren't just faster versions of human trading decisions—they're fundamentally different approaches that exploit the physics of electronic markets.

Take latency arbitrage, for example. When the price of Apple stock changes on NASDAQ, it takes time for that information to propagate to other exchanges. We're talking about microseconds, but in HFT terms, that's an eternity. Systems that can detect these price discrepancies and execute trades on slower exchanges before the price updates can earn nearly risk-free profits.
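The detection step can be sketched as a comparison of quotes across venues. The venue names and prices below are made up, and the sketch ignores fees, order sizes, and the risk that the stale quote disappears before an order arrives. It only shows what a price discrepancy looks like in data.

```python
from typing import NamedTuple


class Quote(NamedTuple):
    bid: int  # best bid, in cents
    ask: int  # best ask, in cents


def find_cross(quotes):
    """Return (buy_venue, sell_venue, edge_in_cents) if one venue's bid
    exceeds another venue's ask, otherwise None."""
    buy_venue = min(quotes, key=lambda v: quotes[v].ask)   # cheapest place to buy
    sell_venue = max(quotes, key=lambda v: quotes[v].bid)  # best place to sell
    edge = quotes[sell_venue].bid - quotes[buy_venue].ask
    if buy_venue != sell_venue and edge > 0:
        return buy_venue, sell_venue, edge
    return None


# The fast venue has already repriced; the slow venue still shows old quotes.
quotes = {"FAST": Quote(1005, 1007), "SLOW": Quote(1000, 1002)}
signal = find_cross(quotes)

# Once the slow venue catches up, the opportunity is gone.
quotes["SLOW"] = Quote(1005, 1007)
signal_after_update = find_cross(quotes)
print(signal, signal_after_update)
```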

The algorithms running these strategies operate under constraints that would make most software developers nervous.

Every calculation must be deterministic and blazingly fast. Many firms use lookup tables instead of mathematical calculations, trading memory for computational speed. Where traditional software might calculate an optimal bid price using complex formulas, HFT systems often pre-compute thousands of scenarios and simply look up the answer.
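The lookup-table idea can be shown with a toy example. The formula here is invented purely for illustration; what matters is the pattern of computing every answer ahead of time and reducing the hot path to a clamp and an array index.

```python
import math

MIN_BUCKET, MAX_BUCKET = -50, 50  # assumed range of a discretized signal


def slow_skew(signal_bucket):
    """Hypothetical 'expensive' formula: quote adjustment in ticks."""
    return round(5 * math.tanh(signal_bucket / 20))


# Pre-compute every possible answer once, before trading starts.
SKEW_TABLE = [slow_skew(b) for b in range(MIN_BUCKET, MAX_BUCKET + 1)]


def fast_skew(signal_bucket):
    """Hot-path version: clamp the input, then index into the table."""
    clamped = max(MIN_BUCKET, min(MAX_BUCKET, signal_bucket))
    return SKEW_TABLE[clamped - MIN_BUCKET]


# The table returns the same answers as the formula across the whole range.
matches = all(fast_skew(b) == slow_skew(b) for b in range(MIN_BUCKET, MAX_BUCKET + 1))
print(matches, fast_skew(0))
```

The trade-off is memory and granularity: the table only covers inputs that were anticipated, so out-of-range values are clamped rather than computed.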

But even software has its limits. For the most time-critical decisions, many HFT firms turn to hardware acceleration through FPGAs (Field-Programmable Gate Arrays), chips whose circuits can be configured to run custom logic with latencies measured in nanoseconds.

Hardware Acceleration: When Software Isn't Fast Enough

FPGAs represent the bleeding edge of HFT technology: reconfigurable chips programmed to execute specific trading logic faster than any general-purpose processor could.

When market data arrives at an FPGA, there's no operating system overhead, no software stack, no uncertain execution time. The logic is literally wired into the hardware.

The key metric is called "tick-to-trade": the time from receiving a market data update to sending a trading order. While software-based systems might achieve tick-to-trade times of 10 to 50 microseconds, FPGA-based systems can respond in under a microsecond. In that time, light itself travels only about 300 meters.

Programming FPGAs requires a completely different mindset from traditional software development. Instead of writing sequential instructions, developers describe parallel logic circuits using hardware description languages like Verilog. Every operation must be precisely timed, and the entire data path must be optimized for the worst-case scenario.

The trade-off is flexibility. Software strategies can be updated with a simple deployment; FPGA changes require recompiling and reflashing the hardware, a process that can take hours. Many HFT firms use a hybrid approach—FPGAs for the most time-critical decisions, with software systems handling more complex strategies that can tolerate additional latency.

But speed without safety is reckless. Even microsecond trading decisions must pass through sophisticated risk management systems.

Risk Management: The Safety Net for Lightning-Fast Decisions

The same speed that makes HFT profitable also makes it potentially catastrophic. A malfunctioning algorithm could lose millions of dollars in seconds, as demonstrated by events like the 2012 Knight Capital incident, where a software glitch resulted in $440 million in losses in just 45 minutes.

This is why every HFT system includes pre-trade risk checks that operate almost as fast as the trading logic itself. These systems monitor position limits, order sizes, correlation exposures, and dozens of other risk metrics in real-time. If any parameter exceeds preset thresholds, the risk engine can instantly block orders or even shut down entire strategies.

The challenge is implementing these checks without introducing significant latency. Traditional risk management systems might query databases or perform complex calculations, but HFT risk engines must operate in microseconds. Many use in-memory position tracking and pre-computed risk scenarios, similar to the optimization techniques used in the trading algorithms themselves.
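A pre-trade check built on in-memory state might look like the following sketch. The limit names and values are assumptions, and real engines track far more dimensions, but every check here is a comparison against a number already held in memory, with no database round trip.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    max_order_qty: int  # largest single order, in shares
    max_position: int   # largest absolute net position, in shares
    max_notional: int   # largest single-order value, in cents


class RiskEngine:
    def __init__(self, limits):
        self.limits = limits
        self.position = 0    # net shares held, updated on every fill
        self.halted = False  # kill switch for the whole strategy

    def check(self, side, qty, price):
        """Return (accepted, reason). `side` is 'B' (buy) or 'S' (sell)."""
        if self.halted:
            return False, "strategy halted"
        if qty <= 0 or qty > self.limits.max_order_qty:
            return False, "order size"
        if qty * price > self.limits.max_notional:
            return False, "notional"
        projected = self.position + (qty if side == "B" else -qty)
        if abs(projected) > self.limits.max_position:
            return False, "position limit"
        return True, "ok"

    def on_fill(self, side, qty):
        self.position += qty if side == "B" else -qty

    def halt(self):
        self.halted = True


engine = RiskEngine(Limits(max_order_qty=500, max_position=1000, max_notional=1_000_000))
first = engine.check("B", 400, 1000)     # within every limit
engine.on_fill("B", 400)
engine.on_fill("B", 400)                 # position is now 800 shares
second = engine.check("B", 400, 1000)    # would take the position to 1,200
oversized = engine.check("B", 600, 1000)
engine.halt()
after_halt = engine.check("S", 100, 1000)
print(first, second, oversized, after_halt)
```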

Smart order routing adds another layer of complexity. When an algorithm decides to buy 1,000 shares of Apple, the system must choose which exchange to target, what order type to use, and how to split large orders across multiple venues. The routing decision might consider current liquidity, historical fill rates, exchange fee structures, and the likelihood of adverse selection—all evaluated in real-time.
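One simple routing policy, shown here only as a sketch, is to take liquidity greedily from the venue with the lowest all-in price (quoted price plus fee). The venues, prices, and fees below are invented, and real routers also weigh fill probability and adverse selection, which this example leaves out.

```python
from typing import NamedTuple


class Venue(NamedTuple):
    name: str
    ask: int   # best ask, in units of 1/10000 dollar
    fee: int   # take fee per share, same units
    size: int  # shares available at the best ask


def route_buy(qty, venues):
    """Split a buy order across venues, cheapest all-in price first.

    Returns (plan, unfilled) where plan is a list of (venue_name, shares).
    """
    plan = []
    remaining = qty
    for v in sorted(venues, key=lambda v: (v.ask + v.fee, -v.size)):
        if remaining == 0:
            break
        take = min(remaining, v.size)
        if take > 0:
            plan.append((v.name, take))
            remaining -= take
    return plan, remaining


venues = [
    Venue("A", ask=100100, fee=30, size=400),
    Venue("B", ask=100100, fee=10, size=500),
    Venue("C", ask=100200, fee=0, size=1000),
]
plan, unfilled = route_buy(1000, venues)
print(plan, unfilled)
```

Venues A and B quote the same price, but B's lower fee puts it first; C is only used for the last 100 shares.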

The Complete System: Orchestrating Microsecond Precision

Putting it all together, a modern HFT system resembles a Formula 1 car more than a traditional computer application. Every component is optimized for performance, with elaborate monitoring systems tracking latency at every stage of the pipeline.

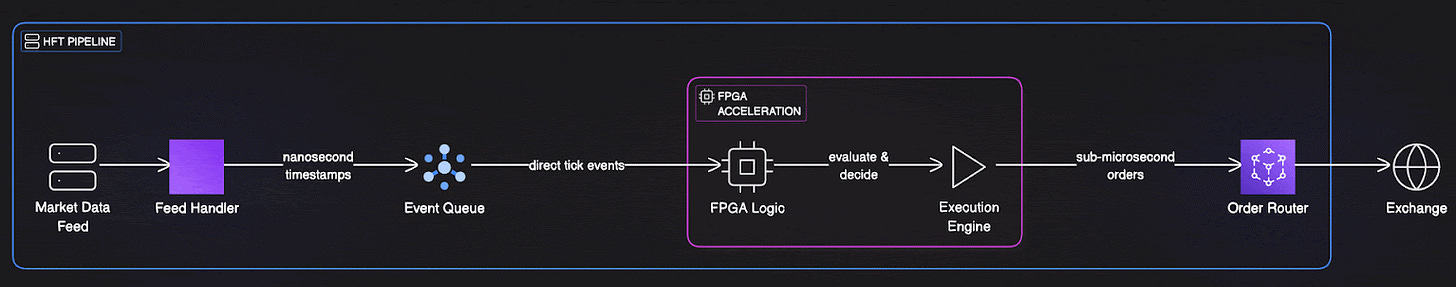

The market data flows from exchange feeds through ultra-low-latency NICs into custom feed handlers. The order books update in real-time across replicated in-memory data structures. Event-driven pipelines carry market updates to strategy engines and FPGAs, where algorithms evaluate opportunities and generate orders. Smart order routers select optimal execution venues while risk engines validate every decision.

Throughout this entire pipeline, nanosecond-precision clocks timestamp every event, allowing firms to measure and optimize performance with extraordinary precision. They track not just average latency, but 99th percentile delays, worst-case jitter, and the timing of every individual component.
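The kind of summary described above can be sketched with nanosecond timestamps from `time.perf_counter_ns`. The helper names are assumptions, jitter is defined here simply as the gap between the slowest and fastest sample, and production systems usually rely on hardware timestamps from the NIC rather than a software clock.

```python
import time


def percentile(sorted_ns, pct):
    """Nearest-rank percentile of an ascending list; pct is an integer 1..100."""
    rank = (pct * len(sorted_ns) + 99) // 100  # ceiling of pct% of n
    return sorted_ns[rank - 1]


def summarize(latencies_ns):
    """Report the tail, not just the middle, of a latency distribution."""
    ordered = sorted(latencies_ns)
    return {
        "p50": percentile(ordered, 50),
        "p99": percentile(ordered, 99),
        "max": ordered[-1],
        "jitter": ordered[-1] - ordered[0],
    }


def timed(stage, *args):
    """Run one pipeline stage and return (result, elapsed nanoseconds)."""
    start = time.perf_counter_ns()
    result = stage(*args)
    return result, time.perf_counter_ns() - start


# Deterministic sample: latencies of 1..100 ns.
stats = summarize(range(1, 101))

# Timing a stand-in stage; the elapsed value will vary from run to run.
result, elapsed_ns = timed(sum, [1, 2, 3])
print(stats, result, elapsed_ns)
```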

The monitoring systems are equally sophisticated. Real-time dashboards track system health, strategy performance, and market conditions. Alerts trigger if any component shows signs of degradation, and automated failover systems can redirect traffic within microseconds. It's like having a pit crew for software, constantly monitoring and optimizing performance.

The Broader Implications: Technology Pushing Financial Evolution

HFT systems represent more than just fast computers—they're a window into how technology transforms entire industries. The same techniques developed for microsecond trading are now being applied to other low-latency challenges: high-frequency data analytics, real-time fraud detection, and automated industrial control systems.

The engineering lessons are profound. When every microsecond matters, you can't rely on traditional software development approaches. You must think about cache behavior, memory allocation patterns, and CPU instruction pipelines. You design for the worst case, not the average case. You measure everything and optimize relentlessly.

These systems also raise important questions about market structure and fairness.

When trading decisions happen faster than human perception, traditional concepts of market participation and price discovery evolve. Regulators struggle to monitor markets that move at machine speed, while exchanges invest billions in technology to keep pace with their most demanding customers.

Yet for all their complexity, HFT systems ultimately serve a simple economic function: they provide liquidity and help prices adjust to new information more quickly. In doing so, they've made markets more efficient, even as they've made them more complex to understand.

Looking Forward: The Race Continues

The technology arms race in HFT shows no signs of slowing. Firms are experimenting with quantum computing for certain types of optimization problems. Others are exploring direct connections to satellite networks to shave microseconds off transcontinental communication. Some are even investigating whether neutrino beams could carry trading signals through the Earth faster than fiber optic cables can carry them around its surface.

What's certain is that this industry will continue pushing the boundaries of what's technically possible. Every breakthrough in low-latency computing, network optimization, or hardware acceleration finds eager adoption in HFT systems. These firms aren't just trading securities—they're trading time itself, and they're willing to invest extraordinary resources to buy nanoseconds of advantage.

For technologists outside finance, HFT systems offer lessons in extreme performance optimization and real-time system design.

The next time you buy or sell a stock, remember that your order enters a world where computers make thousands of decisions in the time it takes you to move your finger. It's a world built on the marriage of advanced mathematics, cutting-edge engineering, and the eternal human desire to be first.