API Gateways Demystified

The One Layer That Powers Resilient Systems

In a microservices architecture, exposing services via external APIs is essential. But direct interaction between clients and services often leads to complexity, latency, and tight coupling. That’s where the API Gateway Pattern becomes critical — acting as a unified entry point that abstracts internal service boundaries while offering performance and security benefits.

The Problem with Direct Client-Service Interaction

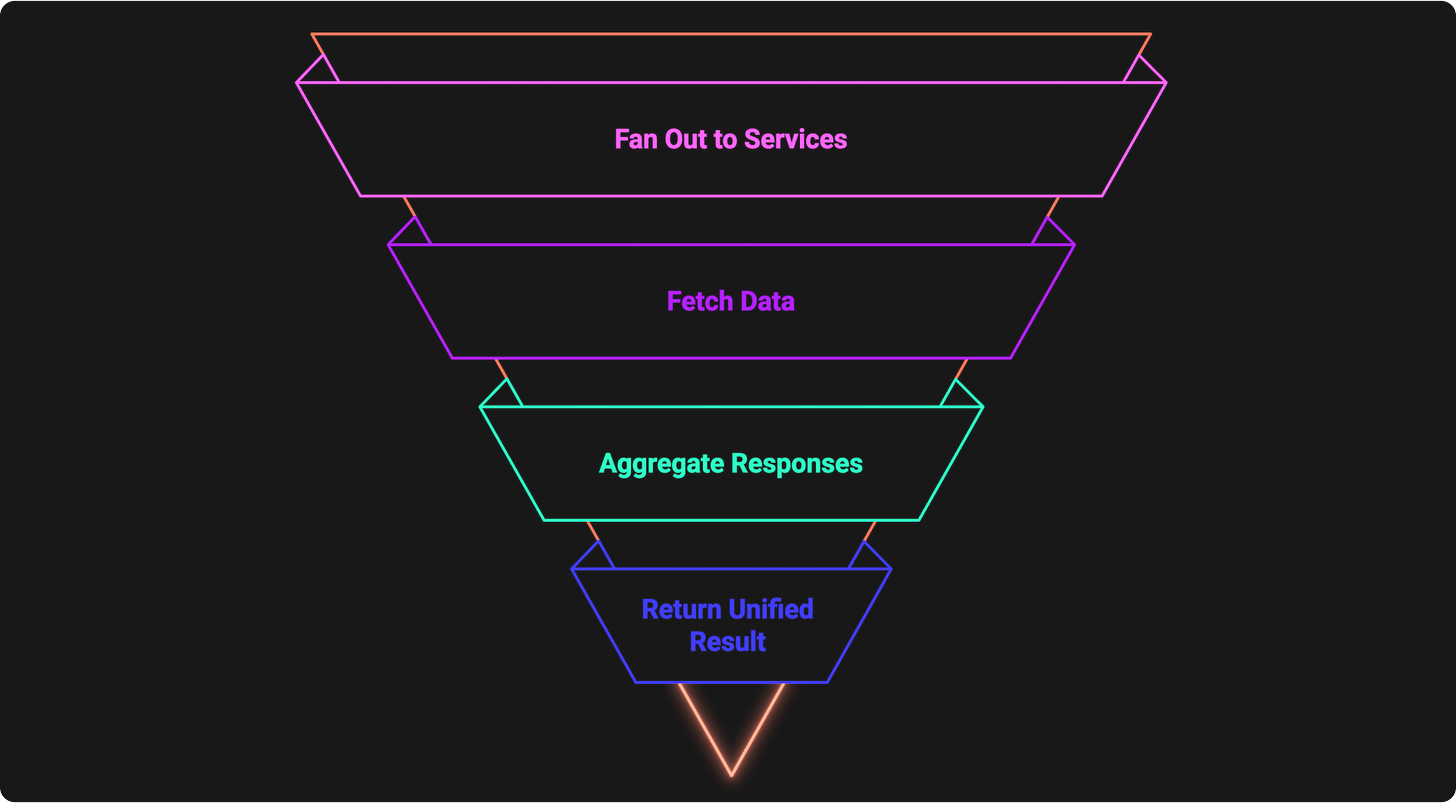

The diagram above shows how one mobile request gets split across microservices, all hidden neatly behind the gateway.

Let’s say your mobile app needs to show a detailed product page. Without an API Gateway, it would have to make 4 separate calls: one to the Products Service for item info, another to Inventory for stock levels, another to Cart for saved items, and yet another to Orders for purchase history.

That’s a lot of round trips, error handling, and logic baked into the client, all of which increases latency and degrades the user experience. The client also becomes tightly coupled to the internal service architecture: it needs to know which services to call, how to call them, and how to handle their responses.

Any change in the backend, such as merging or splitting services, risks breaking the client. Protocol mismatches can also creep in. Your backend might use gRPC or event-driven messaging internally, but clients typically expect standard HTTP/JSON interfaces.

Exposing internal protocols externally introduces unnecessary complexity and security risks.

Instead, with an API Gateway, when a client makes a single API request…

The gateway first fans out the request to multiple backend services. Each service is queried independently to fetch its respective data. Once the data is returned, the gateway aggregates the responses, combining them into a single, unified structure. Finally, the aggregated result is returned to the client as one consistent response. This process hides backend complexity and keeps the client lean and the backend flexible. If your internal services evolve, your frontend doesn’t break, because the gateway shields it from the chaos.
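To make the fan-out and aggregation steps concrete, here is a minimal sketch in Python using `asyncio`. The four `fetch_*` functions are hypothetical stand-ins for the Products, Inventory, Cart, and Orders services from the example above, and the returned values are made up for illustration. A real gateway would make network calls at those points.

```python
import asyncio

# Hypothetical stand-ins for the four backend services. Each one just
# sleeps briefly to simulate network latency and returns made-up data.
async def fetch_product(product_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"id": product_id, "name": "Example item"}

async def fetch_inventory(product_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"in_stock": 12}

async def fetch_cart(user_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"saved_items": ["sku-1"]}

async def fetch_orders(user_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"past_orders": 3}

async def product_page(user_id: str, product_id: str) -> dict:
    """Fan out to all four services concurrently, then aggregate."""
    product, inventory, cart, orders = await asyncio.gather(
        fetch_product(product_id),
        fetch_inventory(product_id),
        fetch_cart(user_id),
        fetch_orders(user_id),
    )
    # Combine the separate responses into one client-facing structure.
    return {
        "product": product,
        "inventory": inventory,
        "cart": cart,
        "orders": orders,
    }

if __name__ == "__main__":
    print(asyncio.run(product_page("user-42", "sku-1")))
```

Because the calls run concurrently, the client waits roughly as long as the slowest service rather than the sum of all four.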

As microservices architectures scale, managing how clients interact with services becomes increasingly complex. An API Gateway addresses this by providing a single, unified entry point that centralizes control and reduces friction between frontend and backend layers.

Here’s how its core features help:

Request Routing ensures that incoming calls are directed to the right service without exposing internal service URLs or structure.
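As a rough illustration of routing, the sketch below maps public path prefixes to internal service addresses. The route table, the hostnames, and the `resolve` helper are all assumptions made for this example, not the configuration format of any particular gateway product.

```python
from typing import Optional

# Hypothetical route table: public path prefix -> internal service address.
# Clients only ever see the public paths on the left.
ROUTES = {
    "/products": "http://products.internal:8080",
    "/inventory": "http://inventory.internal:8080",
    "/cart": "http://cart.internal:8080",
    "/orders": "http://orders.internal:8080",
}

def resolve(path: str) -> Optional[str]:
    """Return the internal URL for a public path, or None if no route matches."""
    # Check longer prefixes first so a more specific route would win.
    for prefix in sorted(ROUTES, key=len, reverse=True):
        # Match the prefix exactly or at a "/" boundary, so that
        # "/productsX" is not routed to the products service.
        if path == prefix or path.startswith(prefix + "/"):
            return ROUTES[prefix] + path
    return None
```

If the Products service later moves or gets split, only the table changes and the public paths stay the same.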

Protocol Mediation bridges the gap between different communication standards—allowing, for example, clients to use HTTP while services run on gRPC or AMQP.

Payload Transformation allows clients and services to communicate using different data formats, reducing tight coupling and simplifying client code.
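A small sketch of what payload transformation might look like: the gateway takes an internal record and reshapes it into the JSON a client expects. Every field name here (`product_id`, `price_cents`, `warehouse_code`, and so on) is hypothetical and chosen only for illustration.

```python
def to_client_payload(internal: dict) -> dict:
    """Map a hypothetical internal product record to a client-facing shape."""
    cents = internal["price_cents"]
    return {
        "id": internal["product_id"],
        "name": internal["display_name"],
        # Format integer cents as a decimal string without float rounding.
        "price": f"{cents // 100}.{cents % 100:02d}",
        "currency": internal["currency"],
        # Internal-only fields such as warehouse_code are deliberately dropped.
    }

internal_record = {
    "product_id": "sku-1",
    "display_name": "Example item",
    "price_cents": 1999,
    "currency": "USD",
    "warehouse_code": "W7",
}
client_view = to_client_payload(internal_record)
```

The client never sees the internal field names, so the service can rename or add fields without forcing a client release.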

Security and Access Control protects the backend by enforcing authentication, authorization, rate limiting, and IP filtering at the edge.
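Here is a minimal sketch of two of those edge checks, IP filtering and API-key authentication, using only the Python standard library. The allowed network, the client ID, and the key are placeholder values assumed for the example. A production gateway would load them from a secrets store and would typically layer on token-based authentication as well.

```python
import hmac
import ipaddress

# Placeholder values for illustration only.
ALLOWED_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]
API_KEYS = {"mobile-app": "s3cr3t-key"}

def is_authorized(client_id: str, api_key: str, remote_ip: str) -> bool:
    """Return True only if the caller passes both the IP filter and the key check."""
    try:
        addr = ipaddress.ip_address(remote_ip)
    except ValueError:
        return False  # malformed address: reject rather than guess
    if not any(addr in net for net in ALLOWED_NETWORKS):
        return False
    expected = API_KEYS.get(client_id)
    if expected is None:
        return False
    # Constant-time comparison avoids leaking key contents through timing.
    return hmac.compare_digest(expected.encode(), api_key.encode())
```

Running these checks at the gateway means a rejected request never reaches a backend service at all.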

Rate Limiting prevents abuse by restricting how frequently a client can call an API within a given time window. This protects backend services from overload, mitigates DDoS risks, and ensures fair usage across consumers.
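One simple way to enforce a limit per time window is a fixed-window counter, sketched below. This is only one of several common algorithms (token bucket and sliding window are others), and the class name, parameters, and injectable clock are assumptions made for the example.

```python
import time
from typing import Callable, Dict, Tuple

class FixedWindowLimiter:
    """Allow at most `limit` calls per client in each `window_seconds` window."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._windows: Dict[str, Tuple[float, int]] = {}  # client -> (start, count)

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        start, count = self._windows.get(client_id, (now, 0))
        # Start a fresh window once the current one has expired.
        if now - start >= self.window_seconds:
            start, count = now, 0
        if count >= self.limit:
            self._windows[client_id] = (start, count)
            return False  # over the limit: the gateway would answer HTTP 429
        self._windows[client_id] = (start, count + 1)
        return True
```

A fixed window is easy to reason about, but it can let through a burst of up to twice the limit around a window boundary, which is why some gateways prefer sliding-window or token-bucket schemes.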

Caching improves performance by serving frequent responses directly from the gateway, reducing load on backend services.
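Gateway caching can be sketched as a small time-to-live (TTL) cache that sits in front of a backend call. The `TTLCache` class and its `get_or_fetch` method are hypothetical names for this example. Real gateways usually also honor HTTP cache headers and bound the cache size, which this sketch leaves out.

```python
import time
from typing import Any, Callable, Dict, Tuple

class TTLCache:
    """Cache values for `ttl_seconds`, then refetch on the next request."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: the backend is not called
        value = fetch()      # miss or expired: call the backend once
        self._store[key] = (now + self.ttl_seconds, value)
        return value
```

A typical key would be the request method plus path and query string, so that repeated identical reads within the TTL are served without touching the backend.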

Monitoring and Observability enable teams to track usage, debug failures, and optimize performance through centralized metrics and logging.

Response Aggregation minimizes the number of client calls by combining data from multiple services into one optimized response.

Together, these capabilities make the API Gateway not just a convenience, but a foundational component for operating microservices securely, efficiently, and at scale.

Implementation Approaches

Once you’ve decided to implement an API Gateway, the next step is choosing how to build it. The right approach depends on your team’s priorities—speed, control, scalability, or flexibility. Below is a comparison of common implementation strategies, including managed solutions, framework-based gateways, and GraphQL-based gateways:

Trade-offs

API Gateways offer many benefits, but they also introduce complexity and risk:

Single Point of Failure: By definition, all requests go through the gateway. If it goes down, all your APIs become unreachable, even if the backend services themselves are healthy. You must architect the gateway for high availability (e.g. multiple instances, failover).

Added Latency: Every request takes an extra hop. The gateway parses the request, enforces policies, and potentially waits for multiple services, which adds network and processing latency. Depending on your implementation, the overhead might be minor or noticeable.
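One common way to keep a slow backend from stalling the whole aggregated response is to give each backend call a timeout and fall back to a partial result. The sketch below assumes two hypothetical services and a 0.2 second budget, both invented for illustration.

```python
import asyncio
from typing import Any, Awaitable

async def call_with_timeout(call: Awaitable[Any], timeout_s: float, fallback: Any) -> Any:
    """Await `call`, returning `fallback` if it takes longer than `timeout_s`."""
    try:
        return await asyncio.wait_for(call, timeout=timeout_s)
    except asyncio.TimeoutError:
        return fallback

# Hypothetical backends: one responds quickly, one is too slow for the budget.
async def fast_service() -> dict:
    await asyncio.sleep(0.01)
    return {"name": "Example item"}

async def slow_service() -> dict:
    await asyncio.sleep(1.0)
    return {"past_orders": 3}

async def handle_request() -> dict:
    product, orders = await asyncio.gather(
        call_with_timeout(fast_service(), 0.2, fallback=None),
        call_with_timeout(slow_service(), 0.2, fallback=None),
    )
    # The slow call is cancelled at the deadline, so the client gets a
    # partial response after about 0.2 seconds instead of waiting a full second.
    return {"product": product, "orders": orders}
```

Whether a partial response is acceptable depends on the endpoint. For a product page, missing purchase history may be fine, while missing price data probably is not.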

Operational Complexity: The gateway configuration can become complex as you support more services and clients. Mistakes in routing or policies can break many APIs at once. You need good tests and monitoring on the gateway itself.

Vendor Lock-In: Using a cloud-managed gateway can tie you to that ecosystem. Migrating to a different gateway solution later may require significant rework of your API definitions and policies.

Scope Creep: There’s a temptation to cram too much logic into the gateway. Avoid making it a catch-all for business logic; it should mostly handle cross-cutting concerns.

The API Gateway is powerful, but it’s not a silver bullet. In small systems, it might be overkill. For very high-performance needs, the extra latency or cost might not justify the benefits. Always weigh the trade-offs for your project.

Conclusion

The API Gateway pattern abstracts internal service complexity, enhances performance through aggregation and caching, and centralizes control over external access.

When implemented effectively, it decouples frontend and backend teams, accelerates development, and hardens your architecture against change. Whether you’re serving mobile clients, partner APIs, or internal tools, the gateway becomes your architecture’s trusted front door.

In a distributed world, clarity matters — and the API Gateway helps keep the chaos on the inside.

I don’t think AWS API Gateway does this. It only returns the response from its configured backend integration, which can be a Lambda function or some other service.